The Housework Theory of Productivity

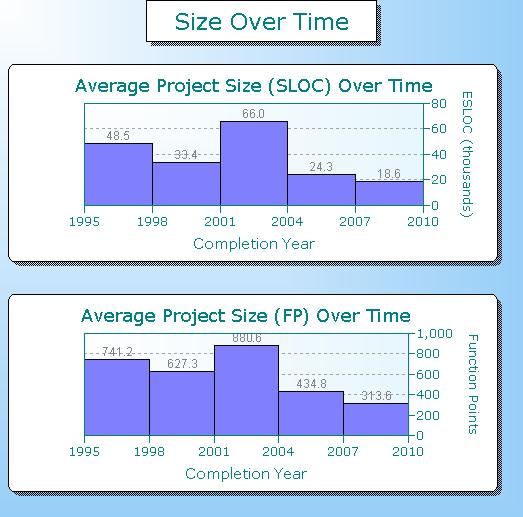

Since we're speculating about why (in the aggregate at least) software productivity appears to have declined over time, here's another possible cause. I call it The Housework Theory of Productivity.

Back in great-Grandma's time, cleaning was a labor intensive endeavor. Rugs were swept once or twice a week and taken outside and beaten by hand once a year. Those cheery little scrubbing bubbles weren't around to whisk the pesky soap scum from our bathtubs - they hadn't been invented yet. When it came to cleaning, our aspirations were limited by the time and effort required to complete even the simplest tasks.

Back in great-Grandma's time, cleaning was a labor intensive endeavor. Rugs were swept once or twice a week and taken outside and beaten by hand once a year. Those cheery little scrubbing bubbles weren't around to whisk the pesky soap scum from our bathtubs - they hadn't been invented yet. When it came to cleaning, our aspirations were limited by the time and effort required to complete even the simplest tasks.

Over time various "labor saving" devices ushered in heretofore unheard of levels of springtime fresh cleanliness. But there was a downside to these advances: as our ability to get things clean increased, so did our expectations. The work expanded to fill the time (and energy) available to us.

If we were to compare two software projects that are same size and perform the same function (but were completed 20 years apart), we'd likely find the modern billing system smaller in size but far richer in features. It would also be more complex, both from an algorithmic and an architectural standpoint. Because today's programming tools and languages make it possible to deliver more value per line of code or function point, our standards have risen. We expect more for our time and money.