In a post for The Guardian's Datablog, Jonathan Grey explores the rise of data journalism. Data journalism is "a journalistic process based on analyzing and filtering large data sets for the purpose of creating a new story. Data-driven journalism deals with open data that is freely available online and analyzed with open source tools."

Although data is a powerful tool, Grey reminds readers that it's not a silver bullet and counters some commonly held data myths.

Data is not a perfect reflection of the world.

Grey states that "Data is often incomplete, imperfect, inaccurate, or outdated. It is more like a shadow cast on the wall, generated by fallible human beings, refracted through layers of bureaucracy and official process." In my experience with the QSM Database validation effort, this is true. Over the past few months, I have reviewed customer-submitted databases for inclusion in the QSM Database, and in that time I've seen some crazy projects (50,000 SLOC in 3 days?). The Data Validation feature in SLIM-DataManager is really useful for plucking out these anomalies so that you can either exclude them from your database entirely or bring them back to the project manager for verification. It's important to keep in mind that mistakes can and do exist in any database, no matter how well-scrubbed it is. One way to make this process easier is to use SLIM-Metrics to create a validation workbook. In the workbook, create queries and reports that mirror SLIM-DataManager and focus on the extremes, for example, a PI less than 1 or a PI greater than 26. A PI less than 1 or greater than 26 is not necessarily impossible, but it's a good idea to flag these anomalies so that you can take a second look and make sure the SLIM-DataManager entry is filled in correctly.
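To make the "focus on the extremes" idea concrete, here is a minimal sketch of that kind of check in plain Python. It is not how SLIM-DataManager or SLIM-Metrics implement validation; the `Project` class, its field names, and the sample values are all hypothetical, and the only things taken from the text above are the PI thresholds of 1 and 26.

```python
from dataclasses import dataclass

# Thresholds from the text: a PI below 1 or above 26 deserves a second look.
PI_LOW = 1.0
PI_HIGH = 26.0


@dataclass
class Project:
    """Hypothetical project record; real databases hold many more fields."""
    name: str
    pi: float  # Productivity Index


def flag_for_review(projects, low=PI_LOW, high=PI_HIGH):
    """Return projects whose PI falls outside the range [low, high].

    Flagged projects are not necessarily wrong. They are candidates
    to verify with the project manager.
    """
    return [p for p in projects if p.pi < low or p.pi > high]


# Illustrative values only, not real project data.
sample = [
    Project("Billing rewrite", 0.4),
    Project("Mobile portal", 14.2),
    Project("Data migration", 27.5),
]

for project in flag_for_review(sample):
    print(f"Review {project.name}: PI = {project.pi}")
```

The function returns the flagged records rather than deleting them, which matches the point above: an extreme value is a reason to check the entry, not proof that it is wrong.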

Data needs to be carefully managed in order to maintain quality. Crowdsourced data may contain a few reliable gems, but it is susceptible to inaccuracies because no one is in charge of managing it. Think about Wikipedia. Anyone can write a Wikipedia entry, which means that *anyone* can write a Wikipedia entry. It's important to check sources of information for reliability so that you can base your estimates on the best history available.

Data is not power.

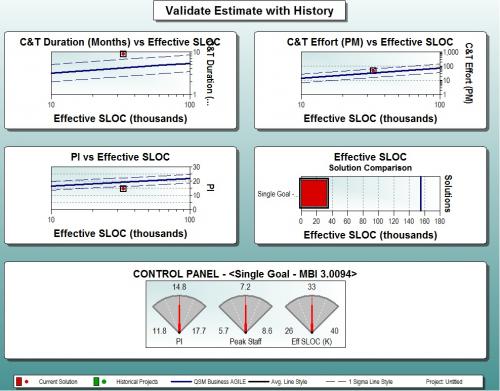

This is one lesson that I disagree with completely. Data IS power, and QSM's database of completed projects can prove it. One significant thing you can grow with data is the trend line. QSM has been collecting and analyzing its database of historically completed software projects since 1978. The trend lines provide an easy way to visualize how key management numbers such as schedule, effort, and MTTD vary with project size. Without QSM's database to "grow" these trend lines, organizations would have to collect data and create their own trend lines for analysis from the very start. Industry data also provides ways to sanity-check your estimates, compare actuals to past performance, calibrate future estimates, and benchmark. Without data, software estimation would be much riskier.
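As a rough illustration of what "growing" a trend line and using it to sanity-check an estimate can look like, the sketch below fits a straight line to effort versus size on log-log axes and then reports how many standard deviations an estimate sits from that line. This is an assumption for illustration, not a description of how QSM builds its trends. The function names, the units, and the handful of data points are all made up.

```python
import math


def fit_trend(sizes, efforts):
    """Least-squares fit of log10(effort) = intercept + slope * log10(size).

    Returns (intercept, slope, sd), where sd is the standard deviation
    of the residuals around the fitted line.
    """
    xs = [math.log10(s) for s in sizes]
    ys = [math.log10(e) for e in efforts]
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    residuals = [y - (intercept + slope * x) for x, y in zip(xs, ys)]
    sd = math.sqrt(sum(r * r for r in residuals) / (n - 2))
    return intercept, slope, sd


def sigmas_from_trend(size, effort, trend):
    """How far an estimate lies from the trend, in standard deviations."""
    intercept, slope, sd = trend
    expected = intercept + slope * math.log10(size)
    return (math.log10(effort) - expected) / sd


# Illustrative history only: size in arbitrary units, effort in person-months.
history_sizes = [10, 100, 1000, 10000]
history_efforts = [1.2, 9, 110, 950]
trend = fit_trend(history_sizes, history_efforts)

# An estimate far below the trend is a prompt to ask questions.
deviation = sigmas_from_trend(1000, 20, trend)
print(f"Estimate is {deviation:.1f} standard deviations from the trend")
```

An estimate that lands several standard deviations below the line is not automatically wrong, but it is the kind of result that history suggests is rarely achieved, which is exactly what a sanity check is meant to surface.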

Industry data can also help fill in gaps in your organization's own history. For example, an organization that is transitioning to Agile might have a large database of waterfall projects, but no Agile projects. They can use their own database for traditional estimation and use QSM's Agile trends to bootstrap their Agile benchmarking and estimation processes until they have sufficient Agile data in their own database.

While Grey is talking about Big Data and the proliferation of data in day-to-day life, data (for the software industry, at least) is NOT easily attainable. When I first started working for QSM, I couldn't understand why everyone wasn't maintaining their own historical databases, but as I've gained more experience, I've come to understand why. For some organizations, there just isn't time at the end of the project's lifecycle to gather actuals because once the project is over, it's time to start a new one. I've written a post about how you can create an effective project closure checklist so that actuals are captured, and other posts about the importance of collecting your own historical data, but we live in an imperfect world.

Grey hit the nail on the head when he said that "Data can be an immensely powerful asset, if used in the right way. But as users and advocates of this potent and intoxicating stuff we should strive to keep our expectations of it proportional to the opportunity it presents." It's important to treat data like any other tool and to examine its uses and shortcomings. Data should never be used to punish. For example, if a project had a very low PI, the team shouldn't be punished. Instead, project managers could try to find out why that happened and perhaps collect qualitative data. Maybe the project had a low PI because the team was required to stop working on it, or for some other reason. Data should be used as a tool for measurement and improvement, to make things better, not as a weapon.