How Does Uncertainty Expressed in SLIM-Estimate Relate to Control Bounds in SLIM-Control? Part III

In the previous articles in this series, I showed how SLIM-Estimate uses uncertainty ranges for size and productivity to quantify project risk, and how to build an estimate that includes contingency amounts to cover your risk exposure. In this post, I will identify the project work plan reports and charts that help you manage the contingency reserve, and show how to use SLIM-Control bounds and default metrics to keep your project on track.

Understand the project work plan documents.

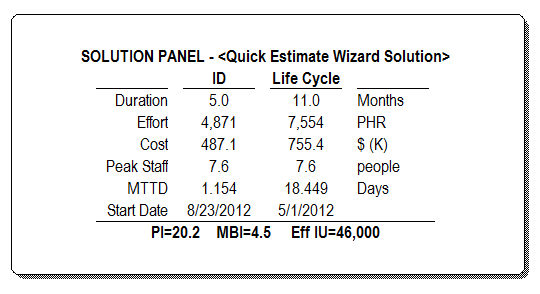

In our example so far, you have estimated a project to deliver a software product in 11.7 months, with a budget of $988,223. This estimate is set at the 80% probability level for both effort and duration, so it carries a contingency reserve, or risk buffer, on top of the work plan. Your work plan is based on SLIM-Estimate's 50% solution: 11 months and $755,400. The uncertainty about size and productivity is therefore accounted for and built into your plan. Whether you meet the project goals depends on many factors, too many to measure. You can only manage what is within your control, and escalate issues so they can be resolved in a timely manner.
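The size of the reserve follows from the two solutions quoted above. The minimal Python sketch below assumes the reserve is measured as the difference between the 80% estimate and the 50% work plan; the function name `contingency` and the dictionary keys are hypothetical illustrations, not part of any SLIM API.

```python
# Figures from the example: the 50% solution is the work plan,
# the 80% solution is the committed estimate (assumed interpretation).
PLAN_50 = {"duration_months": 11.0, "cost_usd": 755_400}
ESTIMATE_80 = {"duration_months": 11.7, "cost_usd": 988_223}


def contingency(plan, committed):
    """Hypothetical helper: return the risk buffer for each metric as
    (absolute amount, fraction of the plan value)."""
    result = {}
    for key, plan_value in plan.items():
        buffer = committed[key] - plan_value
        result[key] = (buffer, buffer / plan_value)
    return result


if __name__ == "__main__":
    for metric, (amount, share) in contingency(PLAN_50, ESTIMATE_80).items():
        print(f"{metric}: buffer {amount:,.1f} ({share:.1%} of plan)")
```

Under this assumption, the cost buffer is $232,823 (about 30.8% of the planned budget) and the schedule buffer is 0.7 months (about 6.4% of the planned duration). This shows that an 80% estimate does not mean an 80% markup on the plan.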

Managing the project well begins with a solid understanding of the detailed project plan. SLIM-Estimate provides several default and customizable charts and reports that document the plan. Here are a few key reports¹ to study so you can identify the core metrics you will want to monitor closely.